By David Archer (Newcastle University [JBA]), 16th December 2014

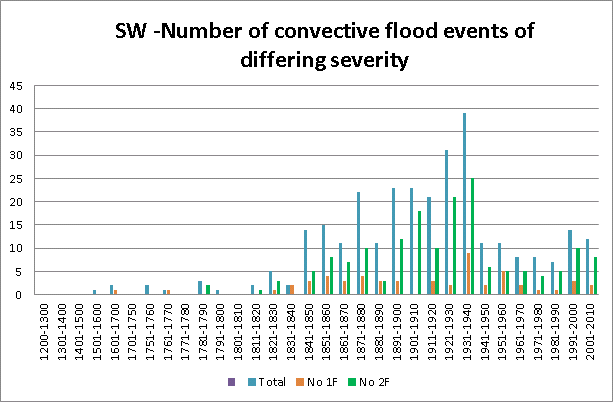

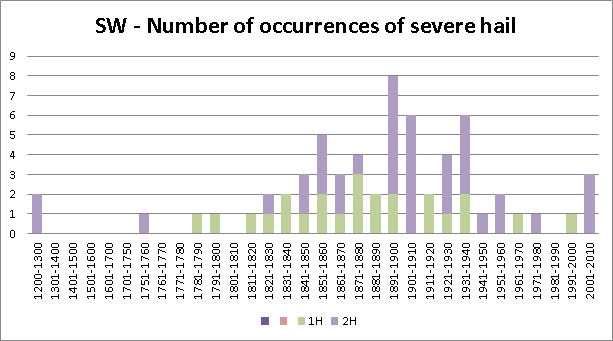

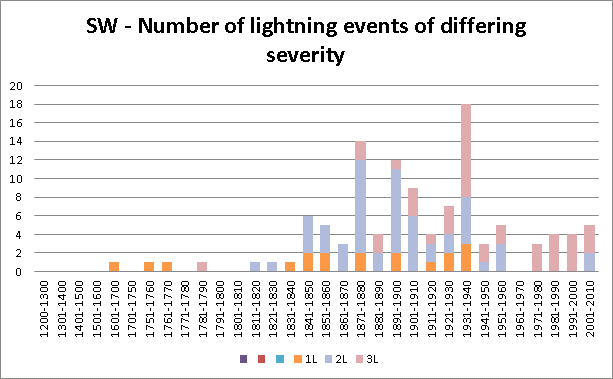

As a contribution to the SINATRA project, I have compiled a chronology of flash floods and categorised the associated meteorological conditions and impacts. This has now been completed for three regions of England: the southwest, the northeast and the northwest. As a first step in the analysis, I have produced time series of events by decade (and by century for the earlier period). The figures below, for Southwest England, show the number of flash flood events of differing severity, the number of large hail events of differing magnitude or severity, and the number of lightning occurrences with differing severity of impact.

For the Southwest, 301 flash flood events for which associated thunder was reported were extracted from a total of over 450 events. Given the availability of newspaper sources, the lists can be considered comprehensive from at least 1850 and perhaps from 1820.

Perhaps the most striking feature of the flash flood series is the contrast between the low number of events in the second half of the twentieth century (within our own experience) and the much higher number from 1850 to 1940, with a dip around 1900. The same pattern and contrast are repeated in the occurrence of large hail and the impact of lightning. The time series for the northeast and northwest show very similar distributions over time.

Are these patterns all spurious? Can the flash flood variations be explained entirely by variation in reporting or by changes in infrastructure (though the latter could work both ways, with improvements in urban drainage but increases in paved area)? In the case of hail effects, changes in the strength of glass could explain part of the variation, but not the observed frequency of occurrence. With respect to lightning, a partial explanation could be improvements in lightning protection or changes in the number of people exposed to events.

Or are these variations real (my belief is that they are)? If so, how can the meteorological basis be explained? Comments welcome!

Convective flood events

The first graph is based on an extraction of events where thunder was reported in association with the event (hence convective). These were categorised in terms of the severity of the associated flooding:

- 1F for events where a large number of properties were flooded, most commonly from pluvial flooding before the runoff reached an established watercourse;
- 2F for events where a small number of properties were flooded;
- Total is the sum of 1F and 2F events.

I have excluded from this analysis those events where only road flooding was reported or where only unspecified remarks were made about the occurrence of flooding.
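As a rough illustration of how the decadal series could be built from categorised events, here is a minimal Python sketch. It assumes each event has already been assigned a category by hand; the record layout, the labels `road_only` and `unspecified`, and the sample rows are hypothetical placeholders, not entries from the chronology.

```python
from collections import Counter

# Hypothetical (year, category) records. These rows are made-up
# placeholders for illustration, NOT data from the chronology.
events = [
    (1852, "1F"),
    (1857, "2F"),
    (1857, "road_only"),    # excluded: only road flooding reported
    (1931, "2F"),
    (1938, "1F"),
    (1968, "unspecified"),  # excluded: unspecified remark of flooding
]

def decade(year):
    """Return the first year of the decade containing `year`."""
    return (year // 10) * 10

def tally_by_decade(records):
    """Count 1F and 2F events per decade; Total is their sum."""
    counts = {}
    for year, category in records:
        if category not in ("1F", "2F"):
            continue  # road-only and unspecified reports are left out
        row = counts.setdefault(decade(year), Counter())
        row[category] += 1
        row["Total"] += 1
    return counts

series = tally_by_decade(events)
for start in sorted(series):
    row = series[start]
    print(f"{start}s: 1F={row['1F']} 2F={row['2F']} Total={row['Total']}")
```

Decades in which every report was excluded simply do not appear in the result, so a plotting step would need to fill those in as zero.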

Occurrences of severe hail

Where hail was reported in association with a flood event, the severity of the hail was categorised as follows:

- 1H where the hail was of sufficient size and intensity to break glass in windows and skylights as well as in greenhouses;
- 2H where large hail or ice was reported (often with weight, diameter or circumference) but without reference to the breaking of glass.

I have excluded from the analysis occurrences of hail of normal size, even where it accumulated to a considerable depth.

Occurrence and effects of lightning

Three main categories of lightning effect and damage are noted:

- 1L where lightning strikes have caused the death of one or more people in the event;
- 2L where people have been struck and injured or animals have been killed by lightning;
- 3L where buildings have been struck. Reports in this category usually refer to damage caused to the buildings by the strike itself or an ensuing fire.

I have excluded from the analysis events where lightning has been reported but no specific evidence of impact on people, animals or buildings is noted.
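The lightning categories can be expressed as a simple decision rule, sketched below in Python. One assumption is not stated in the text: where a single event had several kinds of impact, the sketch assigns the most severe category. The function name and its parameters are hypothetical.

```python
def lightning_category(people_killed=0, people_injured=0,
                       animals_killed=0, buildings_struck=0):
    """Return '1L', '2L', '3L', or None for an excluded event.

    Assumption: an event with several kinds of impact is given the
    most severe category that applies (1L before 2L before 3L).
    """
    if people_killed > 0:
        return "1L"  # one or more deaths
    if people_injured > 0 or animals_killed > 0:
        return "2L"  # people injured or animals killed
    if buildings_struck > 0:
        return "3L"  # buildings struck (strike damage or ensuing fire)
    return None      # lightning reported, no specific impact noted

# A fatal strike that also damaged a building is classed by the fatality.
print(lightning_category(people_killed=1, buildings_struck=1))
```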