
By Dr. Adrian Champion (University of Reading), 19th May 2014

One of the most important requirements for improving our prediction of flash floods is to know and understand their atmospheric precursors, i.e. what was the atmosphere doing that caused the flash flood to occur? As mentioned in an earlier post (Summer Intense Rainfall Events vs Winter Flooding, 28th January 2014, Adrian Champion), there are many problems associated with predicting flash floods. The main issue in understanding the atmospheric precursors, however, is the scale at which flash floods occur.

Flash floods are floods that last for less than a day. They affect a very small area and are caused by weather systems that are only a few tens of kilometres in size. This makes them very difficult to predict and observe, with weather forecast models only recently able to predict systems of this size, and ground observations also being at this scale. Detecting which atmospheric features cause flash floods is therefore extremely challenging.

Atmospheric Rivers

Atmospheric Rivers are areas of high moisture convergence that, when present, stretch back from the UK coastline across the Atlantic for at least 2000 km and are present for at least 18 hours. This represents a significant amount of moisture that has the potential to fall as intense rain over the UK. Atmospheric Rivers have already been shown to be the cause of 60% of all the most extreme winter flooding events in the UK over the past 30 years.
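The length and duration thresholds above can be written as a simple check. The following is only a minimal sketch, not the method used in the project: the function name, the input (a list of feature lengths at successive analysis times) and the 6-hour spacing are all assumptions made for illustration, and the moisture-convergence and UK-coastline criteria are not tested here.

```python
from typing import Sequence

MIN_LENGTH_KM = 2000.0  # minimum length quoted in the text
MIN_DURATION_H = 18.0   # minimum persistence quoted in the text


def meets_river_thresholds(lengths_km: Sequence[float],
                           step_hours: float = 6.0) -> bool:
    """Return True if the feature is >= 2000 km long for >= 18 hours.

    lengths_km: feature length at each analysis time (hypothetical input).
    step_hours: spacing between analysis times (6 h is an assumption).
    """
    consecutive = 0  # number of successive times meeting the length threshold
    for length in lengths_km:
        consecutive = consecutive + 1 if length >= MIN_LENGTH_KM else 0
        # n successive analysis times span (n - 1) * step_hours hours
        if (consecutive - 1) * step_hours >= MIN_DURATION_H:
            return True
    return False


print(meets_river_thresholds([2100, 2500, 2300, 2050]))        # True
print(meets_river_thresholds([2100, 2500, 1900, 2300, 2050]))  # False
```

In the second call the feature drops below 2000 km part-way through, so no unbroken 18-hour period exists and the check fails.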

Preliminary findings suggest that Atmospheric Rivers cannot be associated with summer flash flooding events. This is not particularly surprising: Atmospheric Rivers provide a continual supply of moisture over a large area for an extended period, which does not match the scale or duration of flash floods. One of the questions being investigated by SINATRA is therefore whether there is a common atmospheric feature that can be attributed to summer flash floods. This also requires an observational record of intense rain events.

Observing Intense Rain

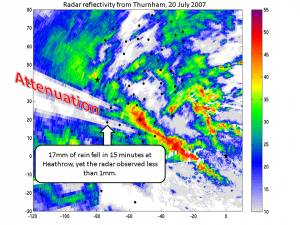

To associate certain atmospheric features with summer flash floods, we need to know when there was intense rain. Part of the work being undertaken at the Department of Meteorology, University of Reading, is to create a record of when flash floods occurred using an observational dataset. One dataset being used is the raingauge network, a series of buckets, known as tipping bucket raingauges, that collect water and record every time 0.2 mm of rain is collected. The advantage of this dataset is that it observes the amount of rain reaching the ground with a high degree of accuracy. The disadvantage is that the gauges are spread sporadically across the UK and are very susceptible to errors, either mechanical or due to local factors. These errors need to be addressed before the intensities can be used to observe the rain.
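To illustrate how tipping bucket records translate into rainfall amounts, the sketch below sums tips into hourly totals, with each tip counted as 0.2 mm. The function name and the input format (a list of tip timestamps) are assumptions for illustration only, and no quality control of the kind described above is attempted.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable

TIP_MM = 0.2  # rainfall represented by one tip, as stated in the text


def hourly_totals(tip_times: Iterable[datetime]) -> Dict[datetime, float]:
    """Sum tips into rainfall totals (mm) for each clock hour.

    tip_times: time of every recorded tip (hypothetical input format).
    Hours with no tips are simply absent from the result.
    """
    # Truncate each timestamp to the start of its hour and count tips per hour
    counts = Counter(
        t.replace(minute=0, second=0, microsecond=0) for t in tip_times
    )
    # Round to one decimal place to hide floating-point noise from n * 0.2
    return {hour: round(n * TIP_MM, 1) for hour, n in sorted(counts.items())}


tips = [
    datetime(2014, 5, 19, 14, 5),
    datetime(2014, 5, 19, 14, 20),
    datetime(2014, 5, 19, 14, 59),
    datetime(2014, 5, 19, 15, 1),
]
for hour, total in hourly_totals(tips).items():
    print(hour, total, "mm")
```

Hourly totals are only one possible choice; a shorter accumulation window may be more appropriate for events that last well under a day.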

A further problem is calculating what rainfall intensity may lead to a flash flood. This is highly dependent on the ground conditions prior to the intense rain. One of the causes of the prolonged flooding over the winter was the ground becoming saturated, followed by a series of heavy rainfall events. The ground is still saturated from the winter flooding, and therefore even a relatively low-intensity rain event may cause flash flooding. The opposite is also a problem: last summer, during the drought period, the ground was so dry that it was unable to absorb any rain, resulting in a higher chance of flash flooding.

Summary

It is clear that associating flash floods with a common atmospheric feature is a complex task that has many problems. Whether or not an intense rain event causes a flash flood is dependent on the preceding ground conditions, where the rain falls (i.e. which catchment) and the period over which the rain falls. It is hoped, however, that by finding an atmospheric feature that is commonly associated with summer flash floods, the prediction of these events can be improved.

The left panel shows the radar rain rate at 1500 UTC on 20 September 2011. The right panel shows the control member forecast rain rate at the same time. The model fails to capture the second rain band.
