
By Dr. Chris Skinner (University of Hull) 9th May 2014

Scientific conferences are a vital way for scientists to meet and discuss their research with one another. They are an opportunity both to share your latest research and receive first-hand feedback and criticism on it, and to catch up on the latest, cutting-edge research going on at other institutions. When it comes to conferences, they do not get much bigger than the European Geosciences Union’s General Assembly, affectionately known as EGU.

EGU is an annual, weeklong conference held in Vienna, around Easter time. For many of us working as part of the FFIR programme, it is a vital fixture in our calendars, and that is simply because of its size. It is big, real big. To give you some figures taken from their website, the 2014 meeting was attended by 12,437 scientists from 106 countries, who presented 9,583 posters and gave 4,829 oral presentations. I was but one of 1,120 scientists from the UK. The advantage of it being so big is that a lot of people – important, clever and useful people – attend and they encompass a wide range of disciplines. It is fertile ground for new ideas and collaborations.

A 360° panorama from outside EGU (Austria Centre Vienna)

A typical day at EGU is a long one. The oral sessions begin at 8.30am, and each block consists of six 15-minute presentations followed by a half-hour coffee break, where one can sample the delights of a Viennese Melange. Two sessions in the morning are followed by an hour-and-a-half break for lunch. This is a chance to grab some food, peruse the several large poster halls, and have a look around the displays in the foyer. These range from ESA to scientific publishers to companies promoting the equipment or services they have for sale. My personal favourite was from Google’s Earth Engine team, who were demonstrating the beta of their GIS platform; it is one to keep an eye on. After lunch there are a further two oral session blocks until 5.00pm, followed by a two-hour poster session where you can talk to the posters’ authors. These are always lively and busy. From 7.00pm there are often further events to attend, such as meetings, workshops or debates; my week included a SINATRA team meeting and a function to celebrate the successful first year of the open-access journal Earth Surface Dynamics.

A session I found particularly interesting this year was “Precipitation: Measurement, Climatology, Remote Sensing, Modelling”. It featured several presentations on the development of the Global Precipitation Measurement (GPM) mission, which aims to dramatically increase the coverage of satellite instruments that can directly detect rainfall and its relative intensity. The constellation’s core satellite launched earlier in 2014, and the sessions made clear that it is working well; the indications are that our ability to observe rainfall from orbit will be greatly improved. This might not have a huge impact on forecasting FFIR in the UK, where we are well served by ground-based instruments, but it will have a big impact on areas that are not, such as South America and sub-Saharan Africa.

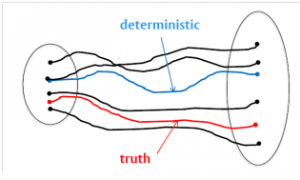

My favourite presentation of the week, however, was given by Massimiliano Zappa of the Swiss Federal Research Institute. His invited presentation in the “Ensemble hydro-meteorological forecasting” session was titled “HEPS challenges the wisdom of the crowds: the PEAK-Box Game. Try it yourself!” The PEAK-Box approach is a method for better understanding and communicating the uncertainty around forecast flood peaks, using a visual box that represents the possible range of peak discharges from a forecast ensemble. During the session, Zappa had the audience make their own predictions (a bit like the old ‘Spot the Ball’ competitions). He predicted that, through the “wisdom of the crowd”, the average of the audience’s responses should be close to the actual flood peak. I will let you know whether or not it worked once he has finished collating and distributing the results!
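For readers curious about the mechanics, here is a minimal sketch of the two ideas in the game, assuming each ensemble member is a simple list of discharges, one value per time step (a hypothetical input format). The box spans the earliest to latest peak time and the smallest to largest peak discharge across the members, and the crowd estimate is just the mean of the audience’s guesses. The function names `peak_box` and `crowd_estimate` are illustrative only; the published PEAK-Box method may include refinements not shown here.

```python
from statistics import mean


def peak_box(ensemble):
    """Bounding box of the ensemble members' flood peaks.

    Hypothetical input: a list of forecast hydrographs, each a list of
    discharges with one value per time step.
    Returns (t_min, t_max, q_min, q_max): the range of peak times
    (as time-step indices) and the range of peak discharges.
    """
    peak_times = []
    peak_flows = []
    for member in ensemble:
        q_peak = max(member)           # highest discharge in this member
        t_peak = member.index(q_peak)  # first time step at which it occurs
        peak_times.append(t_peak)
        peak_flows.append(q_peak)
    return min(peak_times), max(peak_times), min(peak_flows), max(peak_flows)


def crowd_estimate(guesses):
    """'Wisdom of the crowd': the mean of the audience's peak guesses."""
    return mean(guesses)


# Three made-up ensemble members (discharge per time step)
ensemble = [
    [10, 30, 55, 40, 20],
    [12, 25, 45, 60, 35],
    [11, 40, 50, 30, 15],
]
print(peak_box(ensemble))                # (2, 3, 50, 60)
print(crowd_estimate([48, 62, 55, 51]))  # 54
```

Here the box says the peak should arrive between time steps 2 and 3 with a discharge between 50 and 60; the game then asks whether the crowd’s average lands closer to the observed peak than any single guess.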

This post gives just a flavour of the activities at EGU. For me personally, the most rewarding and productive aspect is meeting face to face many of the people I will be working alongside in the SINATRA project, as well as many excellent scientists from around the globe whom I had previously only communicated with on Twitter or in the blogosphere. And if you get the chance to escape for a few hours, there is always the beautiful city of Vienna to explore.

View over the New Danube at sunset, close to EGU. It’s pretty nice.

You can follow Chris Skinner on Twitter: @cloudskinner

The left panel shows the radar rain rate at 1500 UTC on 20 September 2011. The right panel shows the control member forecast rain rate at the same time. The model fails to capture the second rain band.