By: Jake Aylmer

The futures of Arctic and Antarctic sea ice are difficult to pin down in part due to climate model uncertainty. Recent work reveals different ocean behaviours that have a critical impact on sea ice, highlighting a potential means to constrain projections.

Since the late 1970s, satellites have monitored the frozen surface of the Arctic Ocean. The decline in Arctic sea ice cover—about 12% area lost per decade—is a striking and well-known signal of climate change. As well as long-term retreat of the sea ice edge, the ice is becoming thinner and more fragmented, making it more vulnerable to extreme weather and an increasingly precarious environment for human activities and polar wildlife. At the opposite pole, sea ice surrounding Antarctica has not, on the whole, changed significantly despite global warming—a conundrum yet to be fully resolved.

There is high confidence that Arctic sea ice will continue to retreat throughout the twenty-first century, but uncertainties remain in the specifics. For instance, when will the first ice-free summer occur? Such questions are inherently uncertain due to the chaotic nature of the climate system (internal variability). However, different climate models give vastly different answers, ranging from the 2030s to 2100 or beyond, which indicates that model biases also contribute to the spread in projected rates of sea ice loss.

My co-authors and I are particularly interested in the role the ocean might play in setting such model biases. Studies show that the ocean circulation has a strong influence on sea ice extent in models and observations, associated with its transport of heat into the polar regions (e.g., Docquier and Koenigk, 2021). If this ocean heat transport varies across climate models, it could have a knock-on effect on the sea ice and thus help explain uncertainties in future projections. To explore this, we must first understand how the relationship between ocean heat transport and sea ice arises.

We looked at simulations of the pre-industrial era, which exclude global warming and thus act as control experiments isolating natural, internal variability. In all models examined, when there is a spontaneous increase in net ocean heat transport towards the pole, there is a corresponding decrease in sea ice area. This is intuitive—more heat, less ice. It occurs independently at both poles, but how the ocean heat reaches sea ice is different between the two.
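As a rough illustration of how such a relationship can be quantified, the sketch below regresses sea ice area anomalies on ocean heat transport anomalies. It is not the analysis from our papers: the time series are synthetic, and the function name `sea_ice_sensitivity`, the units, and the value of `true_slope` are all assumptions made purely for this example.

```python
import numpy as np


def sea_ice_sensitivity(oht_anom, ice_area_anom):
    """Return (slope, correlation) of sea ice area against OHT anomalies.

    The slope is the least-squares change in sea ice area per unit
    change in ocean heat transport (OHT).
    """
    oht = np.asarray(oht_anom, dtype=float)
    ice = np.asarray(ice_area_anom, dtype=float)
    slope, _intercept = np.polyfit(oht, ice, 1)  # degree-1 (linear) fit
    corr = np.corrcoef(oht, ice)[0, 1]
    return slope, corr


# Synthetic stand-in for a control simulation (invented numbers, not model output).
rng = np.random.default_rng(0)
n_years = 500
oht = rng.normal(0.0, 10.0, n_years)  # OHT anomaly, assumed units: TW
true_slope = -0.02                    # assumed: million km^2 of ice per TW
ice = true_slope * oht + rng.normal(0.0, 0.1, n_years)  # ice area anomaly

slope, corr = sea_ice_sensitivity(oht, ice)
print(f"sensitivity: {slope:.4f} million km^2 per TW, correlation: {corr:.2f}")
```

A negative slope corresponds to the "more heat, less ice" behaviour described above. With real model output, the two series would be taken from a simulation's diagnostics for one hemisphere at a time.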

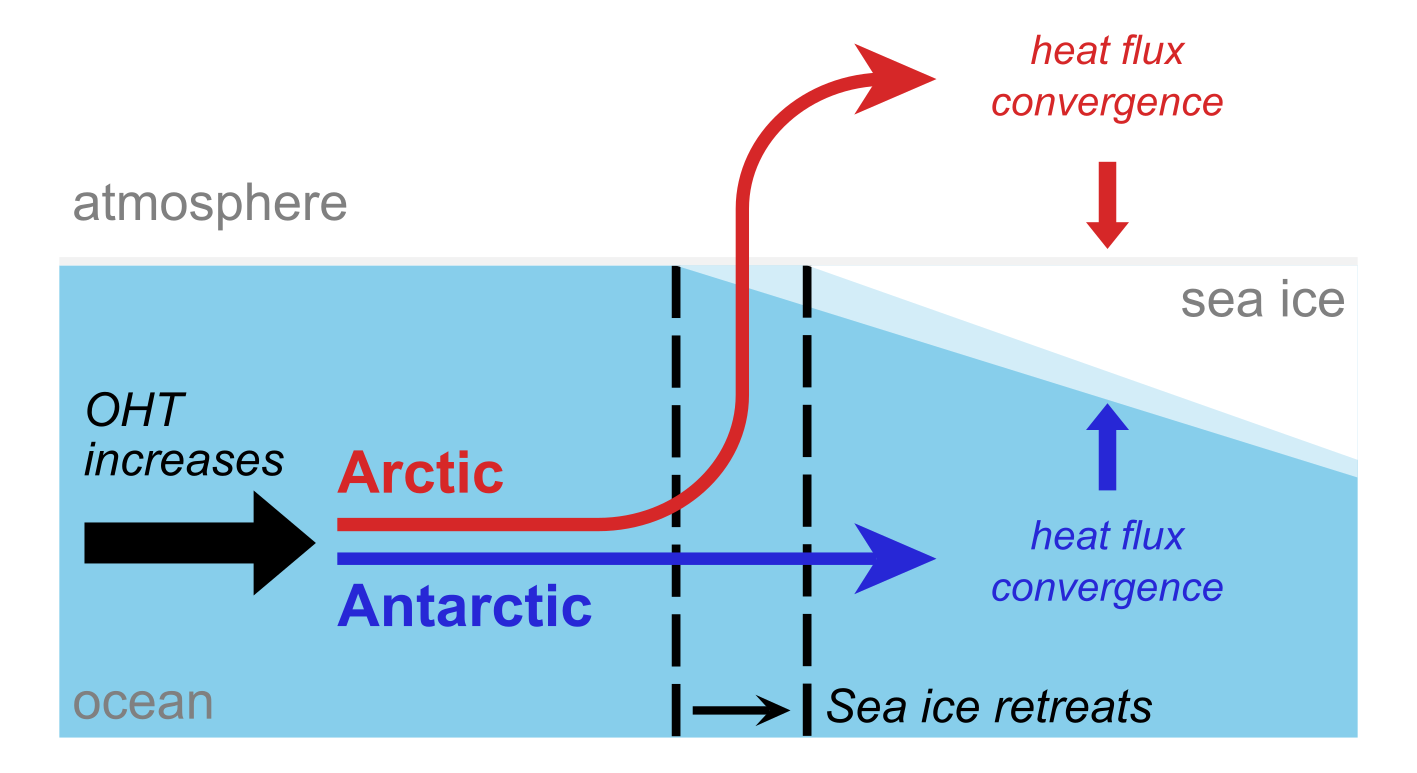

In the Arctic, the heat is released around the sea ice edge. It does not extend far under the bulk of the ice pack because there are limited deep-ocean routes into the Arctic Ocean, which is itself shielded from rising heat by fresh surface water. Nevertheless, the ocean heat transport contributes to sea ice melt nearer the North Pole, assisted by atmospheric transport acting as a ‘bridge’ to higher latitudes. For Antarctic sea ice, the process is more straightforward: the heat is simply released under the whole sea ice pack, because the Southern Ocean does not have the same oceanographic obstacles as the Arctic, and there is no atmospheric role (Fig. 1). These different pathways result in different sensitivities of the sea ice to changes in ocean heat transport, and are remarkably consistent across different models (Aylmer, 2021; Aylmer et al., 2022).

Figure 1: Different pathways by which extra ocean heat transport (OHT) reaches sea ice in the Arctic (red), where it is ‘bridged’ by the atmosphere to reach closer to the North Pole, compared to the Antarctic (dark blue), where it is simply released under the ice. Schematic adapted from Aylmer et al. (2022).

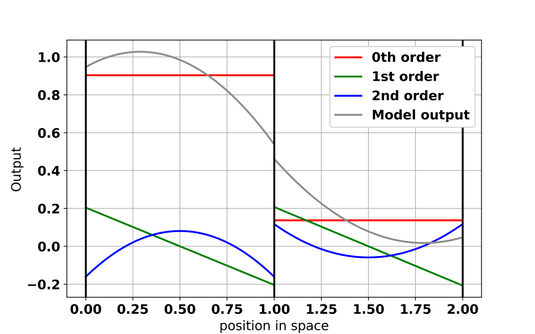

We can also explain how much sea ice retreat occurs per change in ocean heat transport using a simplified ‘toy model’ of the polar climate system. It builds on our earlier theoretical work on why sea ice is more sensitive to oceanic than atmospheric heat transport (Aylmer et al., 2020; Aylmer, 2021). This work, which is ongoing, accounts for the different pathways shown in Fig. 1, and we have shown that it quantitatively captures the climate model behaviour (Aylmer, 2021).

There is mounting evidence that the ocean plays a key role in the future evolution of Arctic and Antarctic sea ice, but questions remain open. For instance, what role does the ocean play in the sea ice sensitivity to global warming—something that is consistently underestimated by models (Rosenblum and Eisenman, 2017)? Our toy-model theory is currently unable to explore this because it is designed to understand the differences among models, not their offset from observations. As part of a new project due to start in 2023, we will adapt it for this purpose and include more detailed sea ice processes that we hypothesise could explain this bias. As more ocean observations become available, it is possible that our work could help to constrain future projections of the Arctic and Antarctic sea ice.

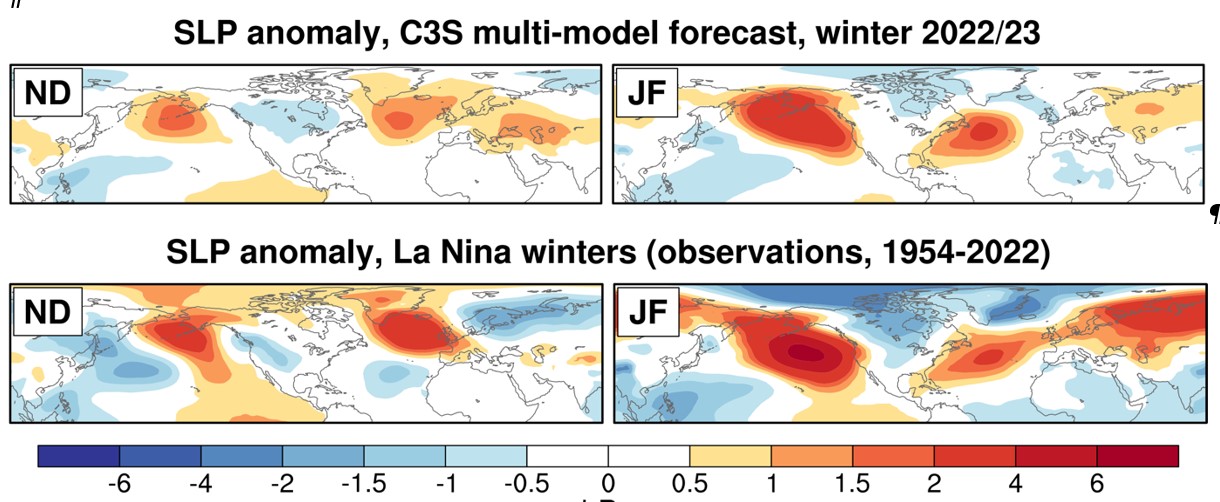
